Ollama Windows 0.9.3

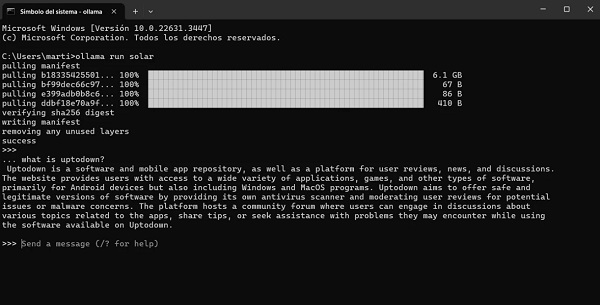

Ollama Windows 0.9.3 is a modern, developer-friendly platform for running large language models (LLMs) locally on your machine, now available for Windows.

Ollama Windows 0.9.3 Description

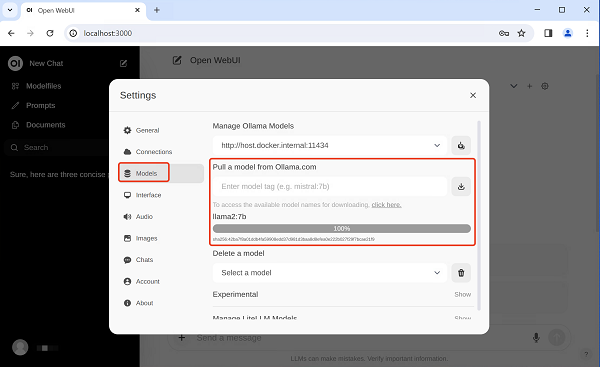

Ollama lets you download and run models such as LLaMA, Mistral, and Gemma directly on your own device, with no cloud connection or hosted API required. The Windows release brings Ollama's streamlined LLM runtime to a broader audience, including developers, researchers, and privacy-conscious users who prefer local processing. It is lightweight, simplifies working with AI models, and gives you full control: no API keys, no cloud costs.

Features of Ollama Windows 0.9.3

1. Run LLMs Locally

- Supports running open-source models such as LLaMA 2, Mistral, Gemma, Phi-2, and code-focused LLMs.
- No internet connection required after the initial model download.
- Works offline, which is excellent for privacy and control.

2. Cross-Platform Support

- Initially available for macOS, now officially supports Windows.
- Linux support is also available.

3. GPU Acceleration

- GPU acceleration on Windows is available for supported NVIDIA and AMD Radeon GPUs.
- CPU execution works smoothly for small to medium models.

4. Developer Integration

- Local HTTP API for programmatic access.
- Useful for chatbots, agents, AI assistants, code copilots, and similar tools.
- Offers an API similar to OpenAI's, so existing client code is easy to switch over.
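
To make the local HTTP API mentioned above more concrete, here is a minimal Python sketch that sends a prompt to a locally running Ollama server. It assumes the server listens on Ollama's default address, `http://localhost:11434`, and uses the native `/api/generate` endpoint. The helper names `build_generate_request` and `generate` are illustrative and not part of Ollama, and the model name used in the usage note is a placeholder for whichever model you have already downloaded.

```python
import json
import urllib.request

# Assumption: Ollama's default local address; change it if your setup differs.
OLLAMA_URL = "http://localhost:11434"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a POST request for the /api/generate endpoint (hypothetical helper)."""
    # "stream": False asks for a single JSON reply instead of a chunked stream.
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to the local server and return the generated text."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    # The non-streaming reply carries the generated text in the "response" field.
    return body["response"]
```

With the server running and a model already pulled, a call such as `generate("mistral", "Summarize what a local LLM runtime does.")` should return the model's reply as a string. For code written against OpenAI-style clients, the same server also exposes an OpenAI-compatible route under `/v1/chat/completions`, so in many cases only the base URL needs to change.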
